RFDiffusion: Accurate protein design using structure prediction and diffusion generative models

Tuesday February 14th, 4-5 pm EST | Joe Watson & David Juergens There has been considerable recent progress in designing new proteins using deep learning methods. Despite this progress, a general deep learning framework for protein design that enables solution of a wide range of design challenges, including de novo binder design and design of higher order symmetric architectures, has yet to be described. Diffusion models10,11 have had considerable success in image and language generative modeling but limited success when applied to protein modeling, likely due to the complexity of protein backbone geometry and sequence-structure relationships. Here we show that by fine tuning the RoseTTAFold structure prediction network on protein structure denoising tasks, we obtain a generative model of protein backbones that achieves outstanding performance on unconditional and topology-constrained protein monomer design, protein binder design, symmetric oligomer design, enzyme active site scaffolding, and symmetric motif scaffolding for therapeutic and metal-binding protein design. We demonstrate the power and generality of the method, called RoseTTAFold Diffusion (RFdiffusion), by experimentally characterizing the structures and functions of hundreds of new designs. In a manner analogous to networks which produce images from user-specified inputs, RFdiffusion enables the design of diverse, complex, functional proteins from simple molecular specifications. Preprint: https://www.biorxiv.org/content/10.1101/2022.12.09.519842v2

RFDiffusion: Accurate protein design using structure prediction and diffusion generative models

Introduction to Protein Design

In this section, Joe Watson and Dana Jurgens introduce themselves and their work on protein design. They highlight the importance of developing generative models for protein design and the use of deep learning in advancing our ability to computationally redesign molecules with desired chemical properties.

Introducing Joe Watson and Dana Jurgens

- Joe Watson is an EMBO post-op fellow at The Institute for Protein Design, University of Washington.

- Dana Jurgens is a graduate student in the Baker Group at the University of Washington.

Importance of Protein Design

- Nature has explored only a small fraction of possible proteins, and evolution does not necessarily select for attributes desired in biotechnological or clinical settings.

- De novo protein design aims to derive new proteins with desired functions and attributes.

- Computational tools have advanced significantly in sequence design, structure prediction, and experimental characterization.

Workflow of Protein Design

This section discusses the workflow involved in protein design, particularly focusing on the structure-first approach followed by the Baker Lab. It highlights the four key steps involved in designing a protein backbone, deriving a sequence, computational filtering, and experimental validation.

Structure-First Approach

- The Baker Lab follows a structure-first approach to protein design.

- Four key steps are involved:

- Generation of a backbone structure capable of carrying out a specific function.

- Derivation of a sequence that can fold up to the designed backbone structure.

- Computational filtering using trusted metrics to narrow down designs for experimental testing.

- Experimental characterization and validation.

Advances in Protein Design

- ML applications have led to significant advancements in sequence design (protein npn) and structure prediction (AlphaFold2, RosettaFold).

- Experimental characterization has also improved, allowing for the validation of designed proteins in a short timeframe.

Developing Deep Learning Methods for Protein Backbones

This section focuses on the development of deep learning-based methods for designing protein backbones. The goal is to overcome the bottleneck in backbone generation and enhance the overall workflow of protein design.

Importance of Backbone Generation

- Backbone generation is a critical step in protein design.

- Current methods often rely on manual or template-based approaches, limiting design possibilities.

Deep Learning-Based Methods

- The team aims to develop new deep learning-based methods for designing protein backbones.

- Leveraging advances in generative AI and molecular simulation, they seek to enhance computational models for backbone generation.

Conclusion

In this presentation, Joe Watson and Dana Jurgens discuss their work on protein design, highlighting the importance of developing generative models and utilizing deep learning techniques. They emphasize the structure-first approach followed by the Baker Lab and outline the key steps involved in protein design. Additionally, they express their focus on developing deep learning methods for backbone generation to overcome current limitations.

New Section

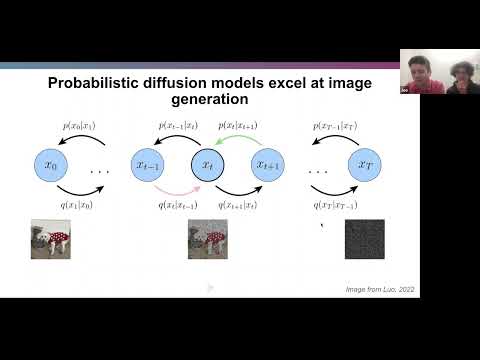

This section explains the concept of training a diffusion model on protein backbones and the benefits it offers for protein design.

Training a Diffusion Model on Protein Backbones

- The process involves adding increasing amounts of noise to an image or starting data over a set number of time steps.

- Intermediate time steps result in grainy images that still bear resemblance to the starting image.

- Eventually, a final time step is reached where the distribution becomes independent of the starting image.

- Gaussian noise is typically used as the noise added at each time step.

- Repeated application of Gaussian noise leads to convergence towards a known distribution.

- A neural network is trained to undo this noise and restore the original image or data.

- Successful training allows sampling from the known distribution by feeding pure noise into the model and denoising it iteratively.

New Section

This section discusses why training a diffusion model on protein backbones presents new challenges compared to training on images.

Challenges in Training on Protein Backbones

- Protein backbones have strong constraints, including four heavy atoms (N, Cα, C, O) connected by three covalent bonds.

- The backbone must form a continuous chain and have an encodable sequence.

- Not all backbones can be encoded by an amino acid sequence, posing challenges for training a diffusion model on protein structures.

New Section

This section explores the representation of protein backbones using frame-based representation for training generative models.

Frame-Based Representation for Protein Backbones

- Alpha fold and Rosetta fold use a frame-based representation for backbone residues.

- The geometry of NC Alpha and C Alpha C bonds in protein backbones is highly constrained.

- The position of oxygen can be derived from NC Alpha C frames.

- This frame-based representation simplifies the training of generative models on protein backbones.

New Section

This section highlights the benefits of training a diffusion model on protein backbones for protein design.

Benefits of Training on Protein Backbones

- Training on protein backbones allows for generating endlessly diverse outputs by feeding in pure noise and adding random noise at each denoising step.

- The direct operation on amino acid coordinates is advantageous for certain applications.

- Diffusion models can condition on a wide range of inputs and be guided by external auxiliary potentials, enabling high-resolution features and approximate fold information to guide generation.

- These factors motivated the training of a diffusion model on protein backbones for protein design.

New Section

This section discusses the challenges of representing protein backbones in three dimensions and training a diffusion model on them. It also explains the process of adding noise to protein structures by applying 3D Gaussian noise to translation and rotation coordinates.

Representing Protein Backbones and Adding Noise

- Protein backbones are represented in three dimensions, requiring consideration of translation and rotation.

- Diffusion models are trained on protein backbones by adding noise to both the position (translation) and orientation (rotation) of frames.

- 3D Gaussian noise is applied to C Alpha coordinates for translation, while Brownian motion is used for rotations on the manifold of rotation matrices (SO3).

Reverse Generative Process and Equivalent Predictions

- Diffusion models have a reverse generative process that involves removing one step of noise at a time.

- There is an equivalence between predicting one step of noise removal or directly predicting the ground truth protein structure.

- This equivalence motivated the approach taken in this study, where a structured prediction network (Rosetta fold) was fine-tuned as a denoising network in a diffusion model.

RF Diffusion Architecture and Training Strategy

- The architecture of Rosetta fold resembles that required for a denoising network, making it suitable for fine-tuning into a generative model called RF diffusion.

- In RF diffusion, Rosetta fold is trained on masked input sequences and noised input coordinates to predict ground truth protein structures.

- The training is performed on most of the Protein Data Bank with proteins less than 384 amino acids, clustered by sequence similarity.

- Noise is applied over 200 time steps to achieve random 3D Gaussian distribution of C Alpha coordinates and uniform distribution of rotations.

Self-conditioning and Fine-tuning from a Structured Prediction Network

- Self-conditioning, inspired by other diffusion literature, involves giving the model access to its previous prediction to improve subsequent predictions.

- This concept is similar to recycling in AlphaFold and Rosetta fold, where multiple attempts are made by the structure prediction network.

- RF diffusion incorporates self-conditioning to enhance the generative process.

- Fine-tuning from a structured prediction network like Rosetta fold provides an efficient basis for training RF diffusion.

New Section

This section provides an overview of the training details and key features of RF diffusion, including the use of self-conditioning and fine-tuning from a structured prediction network.

Training Details and Dataset

- RF diffusion is trained on the Protein Data Bank, specifically proteins less than 384 amino acids without cropping.

- The training utilizes 200 time steps for noise application, resulting in a random 3D Gaussian distribution of C Alpha coordinates and uniform rotations.

- The model is trained on eight A100s for approximately four days, considering its size of about 80 million parameters.

Self-conditioning and Fine-tuning

- Self-conditioning is incorporated into RF diffusion based on previous research showing that accessing previous predictions improves subsequent ones.

- Fine-tuning from a structured prediction network like Rosetta fold serves as an effective foundation for training RF diffusion.

New Section

In this section, the speaker discusses the process of generating X naught hat and feeding it into the model along with coordinate inputs. The model leverages both the current position of coordinates and previous time step predictions to improve RF diffusion.

Generating X naught hat and Model Input

- X naught hat is generated and fed into the model along with coordinate input at time step T.

- The model utilizes both the current position of coordinates and its previous time step predictions to make its next prediction.

- This approach significantly improves RF diffusion.

New Section

This section focuses on benchmarking the performance of RF diffusion on a mixed benchmark set, including unconditional generations and functional motif scaffolding problems.

Benchmarking Performance of RF Diffusion

- The performance of RF diffusion is benchmarked on a mixed benchmark set.

- The set includes unconditional generations where any protein can be created, as well as functional motif scaffolding problems where a scaffold needs to be built to support a specific protein segment.

- RMSD between Alpha fold and design is used to measure recapitulation, with lower values indicating better performance.

- Alpha fold confidence, which represents how confident Alpha fold is in its prediction, is also considered.

- Self-conditioning by allowing RF diffusion to see its previous time step predictions significantly improves performance in both unconditional generation and functional motif scaffolding tasks.

New Section

This section discusses fine-tuning a structure prediction network for RF diffusion using pre-trained weights from Rosetta Fold. A comparison is made between fine-tuned models and randomly initialized models.

Fine-Tuning with Pre-Trained Weights

- The approach involves fine-tuning a structure prediction network using pre-trained weights from Rosetta Fold.

- A control experiment is conducted by training RF diffusion with randomly initialized weights in Rosetta Fold and diffusion.

- The results show that fine-tuning from pre-trained weights significantly outperforms randomly initialized models in terms of performance across various tasks.

- Leveraging the inductive bias of a structure prediction network improves the quality of generated proteins.

New Section

This section showcases the output structures generated by RF diffusion under different conditions, including without pre-training, with pre-training but no self-conditioning, and with both pre-training and self-conditioning.

Output Structures of RF Diffusion

- Without pre-training, the model struggles to learn and generate protein-like structures.

- With pre-training but no self-conditioning, the generated proteins resemble proteins to some extent but lack proper packing and diversity.

- When both pre-training and self-conditioning are employed, RF diffusion produces high-quality protein structures that are well-packed, exhibit mixed topology, and are visually appealing.

New Section

In this section, the speaker discusses experimental verification of proteins generated by RF diffusion. Designs from various problems are tested to evaluate the model's performance.

Experimental Verification

- Proteins designed by RF diffusion are experimentally verified.

- Designs from problems requiring limited backbone generation are selected for testing.

- The initial focus is on unconditional backbone generation to assess how well the model can create protein domains that fold nicely.

- Results show that even for long proteins up to 400 amino acids, RF diffusion can generate highly designable structures that can be accurately recapitulated by Alpha fold.

Benchmarking Against Rosetta Fold Hallucination

In this section, the speaker discusses benchmarking the AlphaFold method against another state-of-the-art method called Rosetta Fold Hallucination. The comparison is made in terms of generating backbones for proteins.

Benchmark Results

- AlphaFold outperforms Rosetta Fold Hallucination in both short and long protein regimes.

- AlphaFold shows lower RMSD (Root Mean Square Deviation) values compared to designs generated by RF diffusion.

- AlphaFold scales better than Rosetta Fold Hallucination.

Verifying Generative Models

This section focuses on verifying that generative models like AlphaFold are not just memorizing the dataset but actually modeling the desired distribution.

TM Score Analysis

- TM score is used as a similarity metric to check if RF diffusion backbones are similar to the training set in the Protein Data Bank (PDB).

- RF diffusion backbones show very little similarity to anything they were trained on in the PDB, indicating that they are modeling new proteins.

Properties of Unconditional Proteins

Here, the speaker explores whether unconditional proteins designed by AlphaFold exhibit desirable properties such as expression, thermostability, and folding behavior.

Experimental Testing

- Unconditional 300 amino acid designs were expressed in E. coli and their CD spectra were analyzed.

- The designs expressed at high levels and exhibited well-folded structures according to CD spectra.

- The designs showed resistance to melting even when boiled, indicating good stability.

Scaffold Libraries for Fold Families

This section discusses the ability of AlphaFold to generate scaffold libraries for specific fold families, which is useful for designing small molecule binders or enzymes.

Input Information

- Two pieces of information are inputted to the network: block adjacency tensor and secondary structure encoding.

- These inputs allow the model to condition its predictions on specific fold families.

Results

- AlphaFold successfully generates designs that recapitulate specific fold families, such as Tim Barrels and NTF2s.

- The in-silico success rate for generating orderable proteins from a fold family is around 40 to 50 percent, indicating high efficiency.

Conclusion

In this presentation, the speaker showcases the capabilities of AlphaFold in generating protein backbones. The method outperforms Rosetta Fold Hallucination, models new proteins instead of memorizing the dataset, exhibits desirable properties in unconditional designs, and enables the generation of scaffold libraries for specific fold families. These findings highlight the potential of AlphaFold in protein design and engineering.

New Section

This section discusses the use of RF diffusion for generating proteins with point symmetry and its advantages over deep learning methods.

Generating Proteins with Point Symmetry

- RF diffusion takes an asymmetric unit's worth of residues from a Gaussian distribution and applies all symmetry operators to generate proteins with point symmetry.

- The network equivalence ensures that the prediction closely respects the point symmetry.

- The process is repeated, gradually reducing noise, until a protein that perfectly respects the point symmetry is obtained.

- This method allows for symmetric sequence design for oligomers, leading to high success rates in silico.

- Dihedral symmetries can be achieved, which was previously challenging with other methods.

New Section

This section highlights the successful experimental verification of designed proteins using negative stain electron microscopy.

Experimental Verification of Designed Proteins

- Proteins designed using RF diffusion were purified and experimentally verified using negative stain electron microscopy.

- The backbone structure of the designed proteins closely matched predictions from RF diffusion and AlphaFold.

- Cyclic and dihedral symmetries were confirmed through 2D class averages and 3D reconstructions.

- Icosahedral symmetries were also achieved by modeling minimal subunits and interfaces in an icosahedron.

- Experimental results showed that the designed particles matched the desired structures accurately.

New Section

This section discusses the generation of functional motif scaffolding using RF diffusion and its superior performance compared to other methods.

Functional Motif Scaffolding

- RF diffusion was tested for the task of functional motif scaffolding and outperformed Rosetta fold in painting and hallucination methods.

- RF diffusion achieved high success rates in silico for a wide range of motif scaffolding problems.

- The designed backbones closely matched the motifs, even when other methods struggled to find solutions.

- Successful motif scaffolding indicates potential functionality in wet lab experiments.

The transcript is already in English, so there is no need to respond in a different language.

Motif Scaffolding and Therapeutics

In this section, the speaker discusses the use of motif scaffolding in the context of therapeutics. They highlight the discovery of tight binders for p53 helix and symmetric motif scaffolding for metal binding domains.

Motif Scaffolding for p53 Helix Binding

- More than half of the tested designs bound with at least the affinity of p53 helix.

- Some designs were identified as super tight binders, with less than one nanomolar binding affinity.

- These findings suggest that these designs could be potential candidates for therapeutics in cancer therapy.

Symmetric Motif Scaffolding

- Symmetric motif scaffolding can be useful to address naturally occurring symmetries in functional proteins.

- The goal is to scaffold symmetric proteins that present functional motifs.

- A metal binding case was chosen to explore this area, specifically scaffolding a nickel binding site using four histidine residues attached to ideal helices.

- Through combining motif scaffolding protocol with symmetric oligomer generation protocol, well-packed oligomers that nicely thread through the motif can be generated.

Designing Oligomers for Nickel Binding

This section focuses on designing oligomers for nickel binding using motif scaffolding and symmetric oligomer generation protocols.

Generating Trajectories

- By combining motif scaffolding protocol with symmetric oligomer generation protocol, trajectories were generated to find well-packed oligomers that also thread through the desired motif.

- Multiple diverse backbones were observed in silico, indicating a variety of potential solutions to the problem.

Experimental Validation

- 48 different designs were ordered and approximately 30-40% showed success in binding nickel ions.

- Negative stain electron microscopy helped verify their ligameric structure and symmetry.

- Mutating away histidines from the oligomers resulted in complete abolishing of the binding signal, confirming the role of histidines in nickel binding.

Protein Binder Design for Therapeutics

This section discusses protein binder design for therapeutics and diagnostics, focusing on creating proteins that can bind to target proteins or peptides.

Multi-Chain Motif Scaffolding

- Protein binder design is modeled as a multi-chain motif scaffolding problem.

- The target protein or peptide is considered as the motif, while the model designs a second chain that packs well against it.

- This approach showed promising results with an improved experimental success rate compared to other methods.

Experimental Success

- The new protocol demonstrated an order of magnitude improvement in experimental success against therapeutically relevant targets.

- Tens of percents of successes were achieved experimentally with a batch of 10,000 designs, which was previously unheard of.

- Strongest hits for each target showed diverse binding interactions and docking folds.

Cryo-EM Validation and Peptide Binding

This section highlights cryo-electron microscopy validation and peptide binding using RF diffusion.

Cryo-EM Validation

- A cryo-electron microscopy structure was solved for a flu binder against flu hemoglobin A.

- The RF diffusion-produced binder closely matched the atomic structure, validating the accuracy of structures generated by RF diffusion.

Peptide Binding

- RF diffusion was used to make binders to therapeutically or diagnostically relevant peptides.

- The protocol involved inputting the peptide structure into the network and designing a fold around it.

- Efficient design campaigns for binders were achieved with high success rates experimentally.

Due to limitations in available timestamps, some sections may not have specific timestamps associated with them.

Protein Backbone Generation with RF-Diffusion

In this section, the speaker discusses the advancements in protein backbone generation using RF-Diffusion and its superior performance compared to previous methods.

Advancements in Protein Backbone Generation

- The speaker highlights that RF-Diffusion has shown a significant improvement in generating protein backbones without any optimization in the wet lab.

- This method outperforms previous computational and experimental methods across a wide range of tasks.

- RF-Diffusion leverages advances in protein structure prediction, including data representation, training strategies, and even weights.

Continuous Improvement

- The speaker mentions ongoing efforts to further improve the model through sampling and training techniques.

- A new model has been discovered that performs even better than the previous version on benchmarks.

- The code for the model will be released along with the publication of the paper.

Timestamps are provided for each bullet point to help locate specific parts of the video.