NSDI '13 - Scaling Memcache at Facebook

Scaling Memcache at Facebook Rajesh Nishtala, Hans Fugal, Steven Grimm, Marc Kwiatkowski, Herman Lee, Harry C. Li, Ryan McElroy, Mike Paleczny, Daniel Peek, Paul Saab, David Stafford, Tony Tung, and Venkateshwaran Venkataramani, Facebook Inc. Memcached is a well known, simple, in memory caching solution. This paper describes how Facebook leverages memcached as a building block to construct and scale a distributed key-value store that supports the world’s largest social network. Our system handles billions of requests per second and holds trillions of items to deliver a rich experience for over a billion users around the world. View the full NSDI '13 program at https://www.usenix.org/conference/nsdi13/technical-sessions

NSDI '13 - Scaling Memcache at Facebook

Scaling Memcache at Facebook

Introduction to Scaling Challenges

- Rajesh Installa introduces the topic of scaling Memcache, emphasizing that it was a collaborative effort and not solely his work.

- He outlines Facebook's infrastructure requirements, highlighting the need for near real-time communication and content aggregation from multiple sources.

Design Requirements for Scalability

- The system must handle over a billion read requests per second while storing trillions of items in cache.

- Geographic distribution is essential due to data centers worldwide, alongside support for rapid deployment of evolving products.

Role of Memcached

- Memcached serves as a distributed key-value store, functioning as an in-memory hash table to manage heavy read loads effectively.

- The talk will cover the architecture involving web servers, databases, and how adding Memcache servers addresses specific challenges like read-heavy workloads.

Data Dependency and Caching Strategy

- Before implementing Memcache, data was stored across several databases based on user IDs; this setup sufficed initially but became inadequate as demands grew.

- A complex data dependency graph illustrates the numerous fetch operations required even for small user requests, indicating that traditional databases alone cannot meet performance needs.

Implementing Demand-Fill Lookaside Cache

- With tens of Memcache servers deployed, they operate under a demand-fill lookaside caching model where cache misses trigger database queries to refill the cache.

- Updates are written directly to the database with subsequent invalidation of cached values through deletes rather than direct updates for idempotency reasons.

Addressing Stale Data Issues

- The potential issue of stale sets arises when different web servers access outdated cached values leading to inconsistencies between cache and database states.

Scaling Web Servers and Database Access

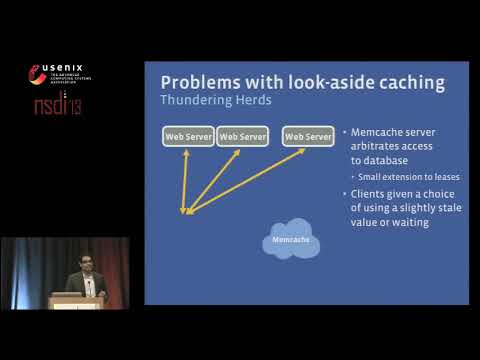

The Challenge of Thundering Herds

- A scenario is described where multiple web servers access a popular piece of shared content simultaneously, leading to database overload.

- When the database value is updated, all servers attempt to access it at once, causing the database to crash and resulting in a poor user experience.

Caching Solutions

- To mitigate this issue, an extension to leases allows memcache servers to manage access to databases by holding off requests until data is refilled.

- As operations scale up with hundreds of servers, more memcache servers are added. Data distribution uses consistent hashing for efficient cache management.

Network Strain and Congestion

- All-to-all communication among web servers leads to network strain as they simultaneously query cache servers.

- This results in "incast congestion," where packet drops occur due to overwhelming shared networking resources on the client side.

Sliding Window Protocol

- A sliding window protocol limits outstanding messages sent over the network; larger windows increase congestion while smaller ones require more round trips.

Scaling Beyond Clusters

- As operations reach thousands of servers and hundreds of millions per second, naive scaling exacerbates all-to-all problems.

- Introducing multiple clusters helps manage scalability but raises challenges regarding cache consistency and data over-replication.

Database Management with mcsql

- The system utilizes MySQL for database storage with mcsql managing transactions and invalidating outdated cache items post-operation.

- This ensures that upon a cache miss, users receive fresh data rather than stale information.

Optimizing Packet Flow

- To reduce packet flow issues caused by high traffic between front-end clusters, memcache routers (mcrouter) are implemented for efficient broadcasting of invalidations.

- This setup minimizes inter-cluster bandwidth usage compared to intra-cluster bandwidth, achieving an 18x reduction in packet density.

Geographically Distributed Data Centers

Data Center Architecture and Management

Overview of Data Centers

- The speaker discusses the global distribution of their data centers, specifically mentioning locations in North Carolina, Oregon, and Lulia, Sweden.

Database Structure and Write Handling

- The system utilizes a single master database with replicas in other regions to manage geographically distributed databases.

- Writes are directed to the master database via web servers making cross-country connections; this is feasible due to a read-dominant workload where reads outnumber writes significantly.

Consistency Challenges and Solutions

- MySQL replication is used to transfer data from the master to replicas; however, this can lead to potential inconsistencies if multiple web servers attempt to read simultaneously.

- To mitigate inconsistency risks, "remote markers" are employed as flags indicating whether a race condition may occur during data access.

Cache Management Strategy

- When initiating a write operation, a remote marker is set in the cache. If present during reads, it indicates that replication has not completed yet.

- If the marker exists, data is read directly from the master; otherwise, it’s retrieved from local replicas. This strategy helps maintain consistency despite being read-heavy.

System Evolution and Lessons Learned

- The architecture evolved from few web servers and databases to multiple frontend clusters while addressing challenges like read-heavy workloads and failure management.

- Managing data replication and consistency became crucial as they scaled operations across various regions.

Operational Efficiency Insights

- A key lesson learned was pushing complexity into clients rather than server-to-server communication which enhances operational capabilities.

- Emphasizing operational efficiency alongside performance led to improved configuration management despite slower performance through multi-step routing processes.

Independent Scaling of Components

- Separating caching mechanisms from persistent storage allows for independent scaling: caches handle high read rates while databases manage updates and storage capacity effectively.

Addressing Bottlenecks in Memcache

Discussion on Memcache Server Limitations

- The speaker acknowledges that bottlenecks within individual memcache servers were not discussed but are significant when considering all memcache servers communicating with web servers simultaneously.

Provisioning Strategies for Performance

- Effective provisioning must account for both average cases and worst-case scenarios due to potential spikes in demand affecting throughput significantly.

Future Considerations for Storage Technologies

Exploring Flash Storage Options

Memcached: Key Insights and Applications

Importance of In-Memory Key-Value Stores

- The effectiveness of memcached as a fast and efficient in-memory key-value store is critical for serving the needs of high-demand sites.

- There are no compelling reasons to replace memcached, as it continues to perform well in its role within system architecture.

Requirements for Caching Systems

- A fundamental requirement for caching systems like memcached is the ability to fetch numerous small data items quickly, addressing what Facebook refers to as a "small data problem."

- Handling billions of operations efficiently is essential; memcache significantly aids in managing these high rates of data retrieval.

Cache Size and Utilization

- Discussion on cache size reveals that items can remain in the cache for varying durations based on their usage patterns, with some lasting only hours or days due to high churn workloads.

- It's crucial to separate persistent storage from caching layers; caches should primarily hold frequently accessed "hot" data rather than all database content.

Data Virality and Cache Efficiency

- The virality of content affects cache utilization; not all items will be equally popular or frequently accessed, impacting how long they stay cached.